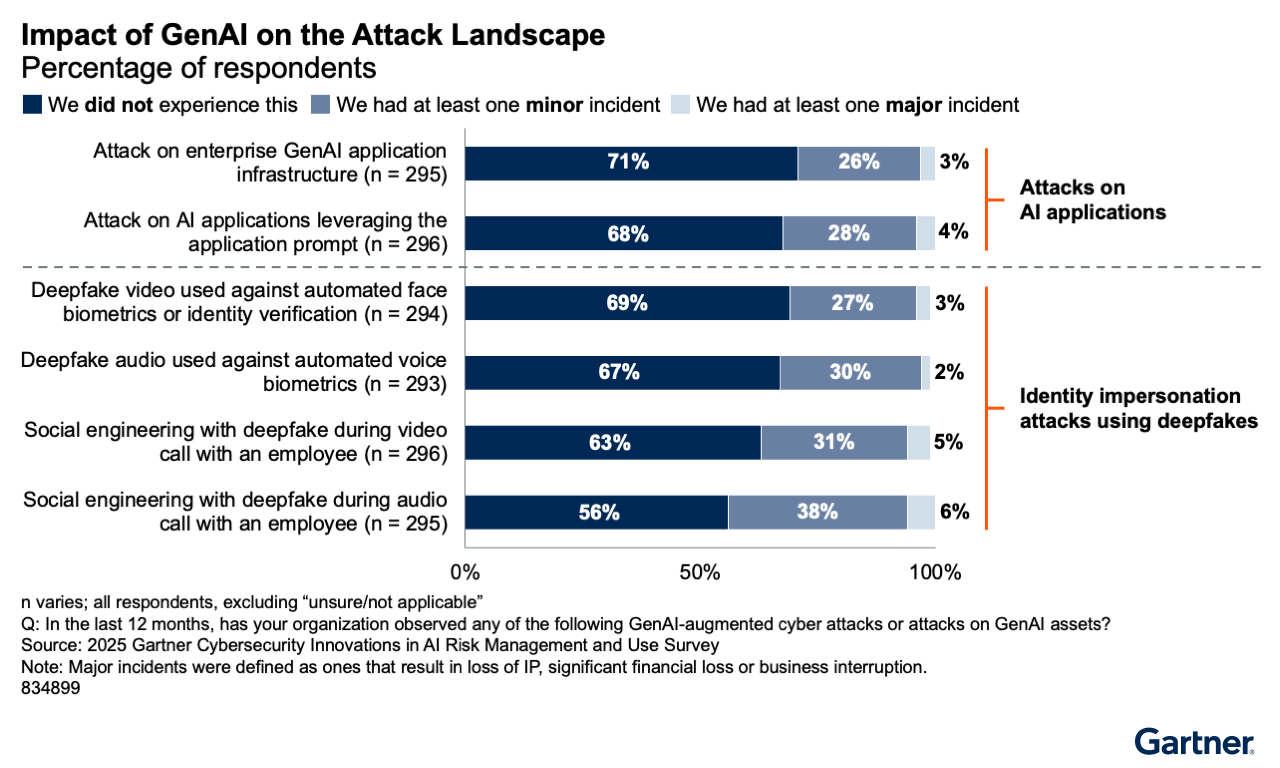

Generative AI (GenAI) has evolved from a convenient tool into a potent weapon for cybercriminals, who are now launching attacks of unprecedented scale and sophistication. A new study from Gartner underscores the severity of this threat, revealing that nearly 30% of organizations worldwide have experienced an AI-driven attack in the past year.

The study, which surveyed 302 cybersecurity experts across North America, Europe, the Middle East, and Asia-Pacific, details an alarming new threat landscape:

- 62% of companies were targeted by deepfake attacks, which were used for everything from sophisticated social engineering to the automated exploitation of business processes.
- Audio deepfakes were the most prevalent, with 44% of organizations reporting that fraudsters had successfully impersonated colleagues over the phone. Additionally, 36% detected deepfake video on conference calls.
- Verification systems were also widely abused: 32% experienced voice tampering aimed at bypassing biometric security, and 30% encountered fake images during identity verification.

Beyond deepfakes, AI assistants have emerged as a primary target. These systems are vulnerable to adversarial prompts: manipulative queries designed to hijack large language models and force them to generate harmful content, divulge sensitive data, or bypass safety protocols. According to the survey, 32% of respondents have already fallen victim to such attacks.

The era of AI-powered threats is here. Legacy security measures are no longer sufficient to defend against these sophisticated, automated attacks. Organizations must move their security infrastructure to a modern, cloud-native platform, one that provides the scalable computing power, advanced security tools, and real-time threat intelligence needed to detect and neutralize these evolving threats.