Apache Kafka is a distributed, real-time messaging system for exchanging data between server applications. Thanks to its high throughput and scalability, it is used to handle large volumes of data.

Kafka was originally developed at LinkedIn. In 2011, the company published the system's source code. Since then, the platform has been developed and maintained as an open source project. Today, Kafka provides enough redundancy to store huge amounts of data reliably. It acts as a high-throughput message bus on which the data passing through it can be processed in real time.

How Kafka works

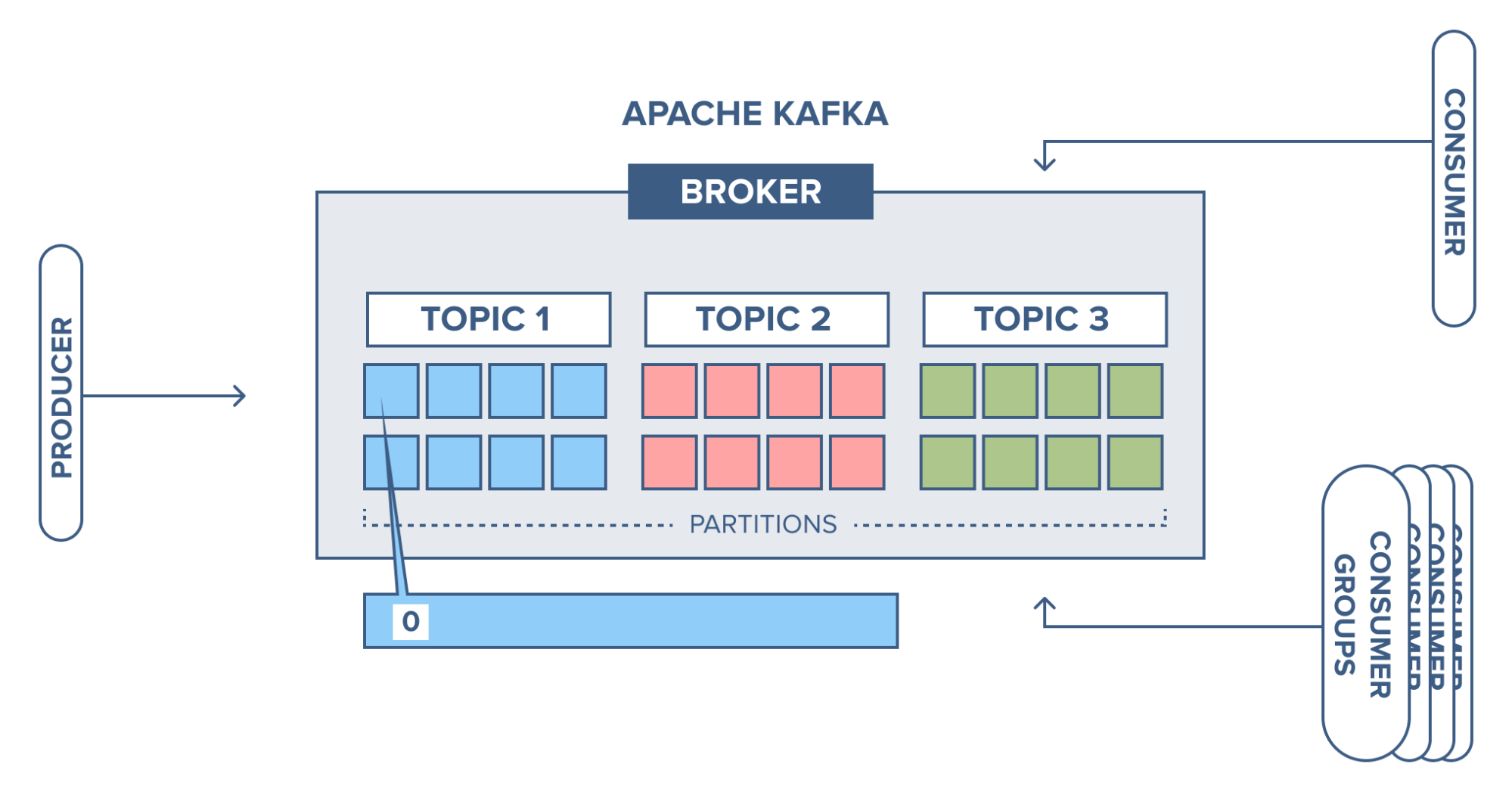

The key components of the Apache Kafka architecture are:

Producer – an application or process that generates and sends data.

Consumer – an application or process that receives the messages generated by producers.

Message – a data package needed to perform some operation (e.g. authorization, purchase, or subscription).

Broker – a node (server) that receives messages from producers, stores them, and serves them to consumer applications.

Topic – a named, logical store of related messages, from which a consumer application retrieves the information it needs.

Briefly, Apache Kafka works as follows:

- The producer application creates a message and sends it to a Kafka broker.

- The broker stores the message in a topic to which consumer applications are subscribed.

- The consumer polls the topic when it needs data and retrieves the messages it is interested in.
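The three steps above can be sketched with a toy in-memory model. This is not the Kafka client API: the `Broker`, `Producer`, and `Consumer` classes, the `purchases` topic, and the sample messages are all hypothetical names chosen for illustration, and the sketch assumes a single broker with no networking or persistence.

```python
from collections import defaultdict


class Broker:
    """Toy stand-in for a Kafka broker: one append-only list per topic."""

    def __init__(self):
        self.topics = defaultdict(list)

    def append(self, topic, message):
        # Store the message at the end of the topic and return its position.
        self.topics[topic].append(message)
        return len(self.topics[topic]) - 1

    def read(self, topic, offset):
        # Return every message stored at or after the given position.
        return self.topics[topic][offset:]


class Producer:
    """Creates messages and hands them to the broker."""

    def __init__(self, broker):
        self.broker = broker

    def send(self, topic, message):
        return self.broker.append(topic, message)


class Consumer:
    """Reads from one topic and remembers how far it has read."""

    def __init__(self, broker, topic):
        self.broker = broker
        self.topic = topic
        self.offset = 0  # position of the next unread message

    def poll(self):
        messages = self.broker.read(self.topic, self.offset)
        self.offset += len(messages)
        return messages


broker = Broker()
producer = Producer(broker)
consumer = Consumer(broker, "purchases")

# Step 1 and 2: the producer sends messages, the broker stores them in a topic.
producer.send("purchases", {"user": "alice", "item": "book"})
producer.send("purchases", {"user": "bob", "item": "pen"})

# Step 3: the consumer asks the topic for data when it needs it.
first_batch = consumer.poll()   # both stored messages
second_batch = consumer.poll()  # empty list: nothing new since the last poll
```

Note that in this sketch the consumer pulls data and tracks its own read position, so the broker does not need to know which consumers exist or what they have already seen.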

Benefits of Kafka

Open Source

Kafka is distributed under an open source license from the Apache Software Foundation and continues to evolve thanks to a large community of developers. Open source enthusiasts improve the core software and write tutorials, manuals, and reviews. Additional packages and patches from third-party developers extend and improve the basic functionality of the system. Thanks to its flexible settings, the system can be adapted to the specifics of a project.

Scalability

Apache Kafka can be scaled out by adding new machines to the cluster without shutting down the entire system. This avoids the downtime associated with server capacity upgrades. This approach, known as horizontal scaling, is more convenient than vertical scaling, which requires adding resources (hard drives, CPU, RAM, etc.) to an existing machine. If necessary, the cluster can also be scaled down by removing machines that are no longer needed.

High performance

Because Kafka is a distributed system, its capacity can grow with data requirements. Kafka Streams makes it possible to process large amounts of data: a stream is created over the data read from a topic, and the processed results are written to another topic. When brokers run on servers with large RAID (Redundant Array of Independent Disks) arrays, writing, reading, and processing data can be quite fast.
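The read, process, and write pattern described above can be illustrated with a minimal sketch. It does not use Kafka Streams itself: plain Python lists stand in for the input and output topics, and `process_stream`, `to_fahrenheit`, and the sensor readings are hypothetical names and data invented for the example.

```python
def process_stream(source, transform):
    """Read each record from the source, transform it, and collect the results."""
    sink = []
    for record in source:
        sink.append(transform(record))
    return sink


def to_fahrenheit(record):
    # Build a new record instead of changing the one that was read.
    return {
        "sensor": record["sensor"],
        "fahrenheit": record["celsius"] * 9 / 5 + 32,
    }


# Stand-in for the input topic.
raw_readings = [
    {"sensor": "s1", "celsius": 20.0},
    {"sensor": "s2", "celsius": 25.0},
]

# Stand-in for the output topic that holds the processed data.
converted = process_stream(raw_readings, to_fahrenheit)
```

The input records are left untouched, so other readers of the same source still see the original data while the processed copy lives in a separate destination.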

Fault tolerance and reliability

Kafka is a distributed messaging system whose cluster consists of multiple nodes. When a message arrives from a producer, it is replicated (copied), and the copies are stored on different nodes. Thus, if a node fails unexpectedly, the data can be restored from the remaining copies, typically without loss.
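A toy model can show why storing copies on different nodes helps. This is a simplified sketch, not Kafka's actual replication protocol: the `Node` and `ReplicatedTopic` classes and the message names are hypothetical, and every write is simply copied to all running nodes.

```python
class Node:
    """Toy cluster node holding its own copy of the message log."""

    def __init__(self, name):
        self.name = name
        self.log = []
        self.alive = True


class ReplicatedTopic:
    """Writes each message to every running node and reads from any of them."""

    def __init__(self, nodes):
        self.nodes = nodes

    def append(self, message):
        # Copy the message to every node that is currently running.
        for node in self.nodes:
            if node.alive:
                node.log.append(message)

    def read_all(self):
        # Serve the data from the first node that is still running.
        for node in self.nodes:
            if node.alive:
                return list(node.log)
        raise RuntimeError("no live replica available")


nodes = [Node("node-1"), Node("node-2"), Node("node-3")]
topic = ReplicatedTopic(nodes)
topic.append("order-1")
topic.append("order-2")

nodes[0].alive = False        # simulate an unexpected failure of one node
recovered = topic.read_all()  # still served, from the copy on node-2
```

The sketch ignores what happens when a failed node comes back and has to catch up, which a real system also has to handle.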

When to use Apache Kafka

Apache Kafka is an effective tool for server projects of any scale. Thanks to its flexibility and fault tolerance, it suits scenarios that require high throughput and scalability, such as processing large volumes of IoT data, streaming video services, and Big Data analytics.